Fighting AI With AI: Army Seeks Autonomous Cyber Defenses


The U.S. Army is seeking information about “Autonomous Cyber” capabilities that use artificial intelligence and machine learning to defend its networks and protect its own intelligent systems against sophisticated cyberattacks. In other words, the Army wants to pit AI against AI in cyberspace.

A branch of the Army’s research and development enterprise known as the Space and Terrestrial Communications Directorate, or S&TCD, is seeking cybersecurity tools able to make “automated network decisions and defend against adaptive autonomous cyber attackers at machine speed,” according to a Jan. 14 request for information, or RFI.

The RFI reflects the Pentagon’s growing interest in algorithmic cybersecurity tools. Public and private sector organizations around the world are investing, to varying degrees, in artificial intelligence and automation to compensate for the global shortage of trained cybersecurity analysts.

The Army envisions acquiring technologies that use machine learning to autonomously detect and address software vulnerabilities and network misconfigurations, the kind of routine mistakes that could give attackers an entry point into its systems.

Organizations are also turning to AI-powered cyber defenses to counter the threat posed by intelligent cyber weapons. In February 2018, more than two dozen researchers representing the Washington-based Center for a New American Security, the universities of Oxford and Cambridge, and nonprofit organizations including the Electronic Frontier Foundation and OpenAI issued a groundbreaking report warning that AI technologies could amplify the destructive power available to nation-states and criminal enterprises.

The report outlines dozens of ways attackers could use artificial intelligence to their advantage, from generating automated spear-phishing attacks capable of reliably fooling their human targets, to triggering ransomware attacks using voice or facial recognition, to designing malware that mimics normal user behavior to evade detection.

Although there have not yet been any confirmed cases of AI-enabled cyberattacks, the researchers conclude that “the pace of progress in AI suggests the likelihood of cyber attacks leveraging machine learning capabilities in the wild soon, if they have not done so already.”

Combating ‘Adversarial’ AI

Pentagon officials appear to be taking the threat seriously. In Dec. 11 testimony before the House Armed Services Subcommittee on Emerging Threats and Capabilities, Pentagon Chief Information Officer Dana Deasy said that researchers at the new Joint Artificial Intelligence Center (JAIC) are already working on technologies to “detect and deter advanced adversarial cyber actors.”

The JAIC will play a key role alongside the Pentagon’s Research & Engineering (R&E) enterprise to “deliver new AI-enabled capabilities to end users as well as to help incrementally develop the common foundation that is essential for scaling AI’s impact across DoD,” Deasy said.

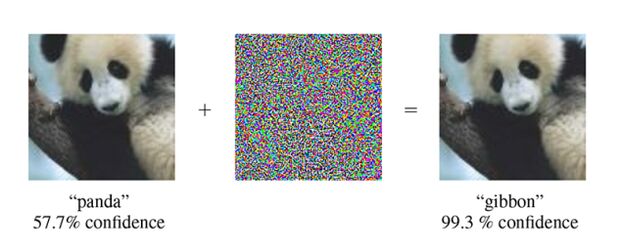

One of the JAIC’s first tasks is to organize the massive stockpiles of data that Pentagon agencies will use to train their machine learning algorithms, and to make that data more resilient to tampering, Lisa Porter, deputy under secretary of defense for research and engineering, told lawmakers. Porter highlighted the risks posed by “adversarial AI,” meaning attacks designed to trick intelligent systems into making mistakes, which could undermine trust in these systems.

“Adversaries have the ability to manipulate AI data and algorithms to the point where the AI system is defeated,” wrote Celeste Fralick, chief data scientist and senior principal engineer at the cybersecurity giant McAfee, in a recent op-ed. For example, attackers could target the Pentagon’s own intelligent cyber defenses with what’s known as a “black box” attack: reverse-engineering the defender’s algorithms in search of blind spots through which malware can slip.
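To make the black-box idea concrete, here is a minimal, hypothetical sketch in Python. It assumes the attacker cannot see inside the defender's model and can only submit samples and observe a yes/no verdict. The detector, its two features ("entropy" and "outbound_conns"), its weights, and its threshold are all invented for illustration and do not describe any real Pentagon or vendor system. The attacker simply nudges one feature it controls, querying after each change, until the detector stops flagging the sample.

```python
# Hypothetical black-box evasion sketch. The attacker never reads the
# detector's internals; it only calls it and observes the verdict.

def hidden_detector(sample: dict) -> bool:
    """Stand-in for a defender's model (invented for illustration).
    Flags a sample as malicious when a weighted score crosses a
    threshold that the attacker does not know."""
    score = 0.7 * sample["entropy"] + 0.3 * sample["outbound_conns"]
    return score > 5.0


def probe_for_blind_spot(sample: dict, feature: str, step: float, max_queries: int = 100):
    """Lower one attacker-controlled feature a step at a time, querying the
    detector after each change, until it no longer flags the sample.
    Returns (evasive_sample, queries_used), or (None, max_queries) if the
    query budget runs out. A real attacker would also need the altered
    malware to keep working; this sketch ignores that constraint."""
    candidate = dict(sample)  # copy so the original sample is not modified
    for queries in range(1, max_queries + 1):
        if not hidden_detector(candidate):
            return candidate, queries
        candidate[feature] = max(0.0, candidate[feature] - step)
    return None, max_queries


# Usage: a sample the detector initially catches.
malware = {"entropy": 6.0, "outbound_conns": 8.0}
evasive, queries = probe_for_blind_spot(malware, "outbound_conns", step=1.0)
print(evasive, queries)
```

The point of the sketch is that the attacker never needs the model's weights: a handful of queries is enough to locate a region of input space the detector does not cover.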

Machine learning systems are also vulnerable to “poisoning” attacks, Fralick said, in which a hacker injects false data into a training data set to bias the algorithm’s results. Consider predictive maintenance: the Air Force is investing heavily in machine learning tools that analyze flight records, maintenance logs, and sensor data to predict when parts will wear out or which aircraft will require maintenance on a given day. If adversaries were able to “poison” the predictive maintenance algorithm, the Air Force could, in theory, be forced to spend millions on unnecessary repairs. Worse, it might send unsafe aircraft out on duty.
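A toy illustration of the poisoning mechanism, not drawn from any Air Force system: the Python sketch below "trains" a hypothetical predictive-maintenance rule that learns a single vibration threshold from labeled historical records, placing it midway between the average reading of parts that stayed healthy and parts that later failed. The feature, the data, and the function names are invented for illustration. Injecting a few falsely labeled records raises the learned threshold enough that a worn part is no longer flagged for maintenance.

```python
from statistics import mean

# Each hypothetical record is (vibration_reading, part_later_failed).


def train_threshold(records):
    """Learn a vibration threshold as the midpoint between the mean reading
    of parts that stayed healthy and the mean reading of parts that failed."""
    healthy = [v for v, failed in records if not failed]
    failing = [v for v, failed in records if failed]
    return (mean(healthy) + mean(failing)) / 2


def needs_maintenance(reading, threshold):
    """Flag a part for maintenance when its reading reaches the threshold."""
    return reading >= threshold


clean = [(1.0, False), (1.2, False), (0.8, False),
         (3.0, True), (3.4, True), (2.6, True)]

# Poisoning: the attacker injects high-vibration records falsely labeled
# as healthy, dragging the "healthy" average (and the threshold) upward.
poison = [(3.2, False), (3.6, False), (3.4, False)]

clean_t = train_threshold(clean)
poisoned_t = train_threshold(clean + poison)

worn_part = 2.4
print(clean_t, needs_maintenance(worn_part, clean_t))
print(poisoned_t, needs_maintenance(worn_part, poisoned_t))
```

Poisoning in the opposite direction, with low readings falsely labeled as failures, would pull the threshold down instead and flag healthy parts, which corresponds to the costly-but-safe failure mode of unnecessary repairs.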

Maintaining trust in these systems is central to the mission of the JAIC and the larger R&E enterprise, said Porter, noting that countering adversarial AI is a top priority for the Defense Advanced Research Projects Agency’s five-year, $2 billion AI Next initiative.

The Army appears to be working toward the same objective in parallel. Its Autonomous Cyber solicitation calls for multiple tools and methodologies it can use to “red team,” or pressure-test, its current AI-based cyber defenses and improve their resistance to manipulation.

Interested parties have until Feb. 13 to respond to the RFI.

Chris Cornillie is a federal market analyst with Bloomberg Government.

To contact the analyst on this story: Chris Cornillie in Washington at ccornillie@bgov.com

To contact the editors responsible for this story: Daniel Snyder at dsnyder@bgov.com; Jodie Morris at jmorris@bgov.com
