AI Disinformation Drives Lawmaker Fears About 2024 ‘Wild West’

- Democrats want to limit campaign AI; GOP slow to sign on

- Campaigns, super PACs already releasing deepfake ads

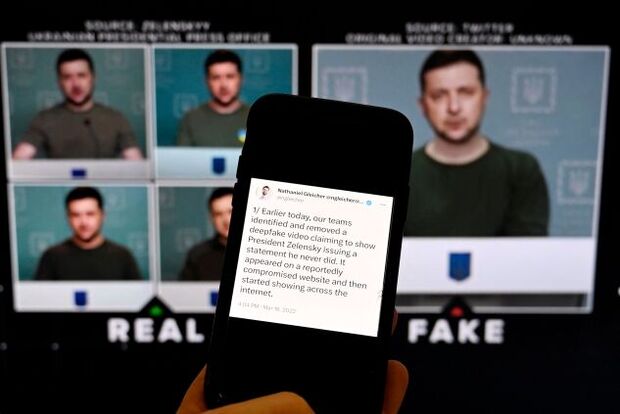

Lawmakers are running short on time and consensus to tackle the rise of deepfakes that threaten to manipulate the 2024 elections.

The increased use of artificial intelligence by campaigns has far outstripped efforts by Congress, political parties, and federal regulators to address the technology. Without limits on deepfakes, fears that disinformation could shape the 2024 elections are expected to worsen as political ad spending ramps up and outpaces past election cycles.

“We are democratizing disinformation by allowing ordinary people to communicate in ways that could be completely fake,” said Darrell West, senior fellow at the Brookings Institution’s Center for Technology Innovation. “I expect that will be the Wild West of the 2024 election. There’s just going to be crazy content everywhere.”

Some Democrats have unveiled modest proposals to boost transparency around AI use in elections, but Republicans have been slow to sign on. Congress is still struggling to grasp the rapidly evolving technology. According to Senate Majority Leader Chuck Schumer, lawmakers are at least months away from introducing comprehensive legislation to mitigate AI’s most serious threats.

In the meantime, the speed, low cost, and efficiency with which generative AI tools can produce video, audio, and text make it easy for candidates to disseminate bogus content to their base of potential voters almost instantly.

Take, for example, the Republican National Committee’s ad in April that showed an AI-generated apocalyptic future under President Joe Biden, or the June ad by Florida Gov. Ron DeSantis’ presidential campaign that featured deepfake images of former President Donald Trump embracing top infectious diseases expert Anthony Fauci, a frequent bogeyman of anti-vaccine supporters. Allied super PACs, flush with cash, are also experimenting with AI. The pro-DeSantis Never Back Down PAC this week released a TV ad featuring deepfake audio of Trump criticizing Iowa Gov. Kim Reynolds, whose state plays a crucial role in the Republican presidential primary.

“It would be absolutely terrible if this really important election gets decided based on disinformation,” West said.

Congress Far from AI Rules

Concerns over disinformation affecting elections aren’t new, but the recent AI boom has heightened them. Some experts predict that soon, 99% of content on the internet will be AI-generated. One study suggests humans may be more likely to believe AI-generated disinformation, based on deciphering fake versus real tweets.

The easiest and quickest option for Congress would be to require labeling on political advertisements that use AI, according to Matthew Ferraro, an attorney at WilmerHale who advises on technology and national security issues. Democratic Sens. Amy Klobuchar (Minn.), Michael Bennet (Colo.), and Cory Booker (N.J.), in May introduced a bill that would do just that (S. 1596). Rep. Yvette Clarke (D-N.Y.) has introduced companion legislation in the House (H.R. 3044).

But the bill hasn’t yet gained traction among Republicans.

“It’s a win, win, win all the way around, because it’s not as though Democrats are immune to the use of the technology,” Clarke said, adding that she is eager to get bipartisan support. “We want to make sure that there’s transparency across the board, so that everyone is aware when something has been fabricated by AI.”

While labeling is an important first step, Klobuchar said, Congress must go further.

“Some of it is going to have to be outright banned, or it’s going to completely fool our constituents on both sides of the aisle,” Klobuchar said at a Senate Democratic press conference on AI last week. She said she’s hoping to craft a bipartisan bill addressing AI in elections that would fit into a larger AI package, which Congress is working out.

Deliberative Approach

But lawmakers, still learning about AI’s benefits and dangers, are reluctant to hastily approve new rules that could stifle technological innovation.

“We need to be thoughtful about this, engage around what the real threats might be, like any technology that can be used for a great force of good or for a force of evil,” said Sen. Steve Daines (R-Mont.), chairman of the National Republican Senatorial Committee. “I’m just always nervous when election officials start talking about creating knee-jerk reactions and more regulation.”

With AI a new topic in Congress, campaign policy may prove too narrow an issue to garner immediate action. When asked about AI use on the campaign trail, Sen. Gary Peters (D-Mich.), chair of the Democratic Senatorial Campaign Committee, said that his Homeland Security and Governmental Affairs Committee would soon hold a hearing “about deepfakes generally, not just with political campaigns.”

Other paths to regulate AI could be through a national privacy law that would promote algorithmic accountability and place limits on the collection of personal data, addressing several major concerns surrounding the technology. Bipartisan efforts to advance federal privacy legislation failed last year, though lawmakers are pushing it again.

“I feel so strongly that we need to be moving forward on privacy policy because that is the data that folks are using to feed systems,” said Rep. Suzan DelBene (D-Wash.), a leading proponent of a national privacy law and chair of the Democratic Congressional Campaign Committee. “That’s the beginning, because if we can start on privacy, then we can build on that to address many other issues in terms of technology.”

FEC, Campaign Action

Disinformation has proved equally difficult to tackle outside of Congress.

When the Biden administration last year tried to form a disinformation governance board, many Republicans viewed it as a means of censoring conservative viewpoints and fiercely condemned the move. The effort was ultimately disbanded.

Advocacy group Public Citizen in May petitioned the Federal Election Commission to regulate deepfake political ads, but the idea did not advance amid skepticism about whether the agency had jurisdiction over the matter. One Republican commissioner said to “take this up with Congress.” The group submitted a new petition last week.

Congress, in turn, has asked the FEC to act. In a letter last week, 50 Democratic lawmakers urged the elections regulator to reconsider the Public Citizen proposal.

“When operating effectively and impartially, the Commission’s sole responsibility of administering and enforcing federal campaign finance law has the potential to protect the integrity of our elections,” wrote the coalition of lawmakers, led by Klobuchar, Sen. Ben Ray Luján (D-N.M.), and Rep. Adam Schiff (D-Calif.). “The FEC’s failure to act can however reduce transparency in our elections and undermine faith in our political system.”

The deadlock largely leaves 2024 campaigns to determine their own uses for AI.

The national Democratic and Republican campaign arms haven’t publicly set rules on how campaigns and staffers may use AI. The House of Representatives’ chief administrative officer, by contrast, notified House offices that they could use only OpenAI’s ChatGPT and no other large language models for privacy and security reasons.

State and Voter Responsibility

In lieu of federal rules, states have been moving ahead. At least nine states, including California and Texas, have enacted policies to limit deepfake use in federal and state elections or pornography. Existing privacy, voting, and defamation laws also restrict how far campaigns may go to persuade voters, and those codes may apply to AI use, according to Ferraro, the attorney at WilmerHale.

“It feels like campaigns are acting as if it’s no holds barred,” Ferraro said. “I suppose it remains to be seen whether that’s actually true because we have state laws that are operative. We have federal laws in other contexts that might apply.”

Social media companies have taken some steps in recent elections to flag misleading or false content to users and remove disinformation on their platforms, though experts, lawmakers, and advocates have demanded they do more.

Ultimately, the onus is on voters to determine reality from fiction and educate themselves on the pervasiveness of AI, according to West, the Brookings fellow.

“People should be prepared for a very tough election,” West said. “The best thing they can do is to be aware of these new tools and how they can be misused.”

To contact the reporters on this story: Oma Seddiq at oseddiq@bloombergindustry.com; Amelia Davidson in Washington at adavidson@bloombergindustry.com

To contact the editors responsible for this story: George Cahlink at gcahlink@bloombergindustry.com; Anna Yukhananov at ayukhananov@bloombergindustry.com
